A Life Preserver for Staying Afloat in a Sea of Misinformation

As a science educator, my primary goals are to teach students the essential skills of science literacy and critical thinking. Helping them understand the process of science and how to draw reasonable conclusions from the available evidence can empower them to make better decisions and protect them from being fooled or harmed.

Yet while nearly all educators would agree that these skills are important, the stubborn persistence of pseudoscientific and irrational beliefs demonstrates that we have plenty of room for improvement. To help address this problem, I developed a general-education science course which, instead of teaching science as a collection of facts to memorize, teaches students how to evaluate the evidence for claims to determine how we know something and to recognize the characteristics of good science by evaluating bad science, pseudoscience, and science denial.

In my experience, science literacy and critical thinking skills are difficult to master. Therefore, it helps to provide students with a structured toolkit to systematically evaluate claims and allow for ample opportunities to practice. In previous semesters I’ve had excellent results with A Field Guide to Critical Thinking (Lett 1990), in which James Lett summarized the scientific method with the acronym FiLCHeRS (Falsifiability, Logic, Comprehensiveness of evidence, Honesty, Replicability, and Sufficiency of evidence).

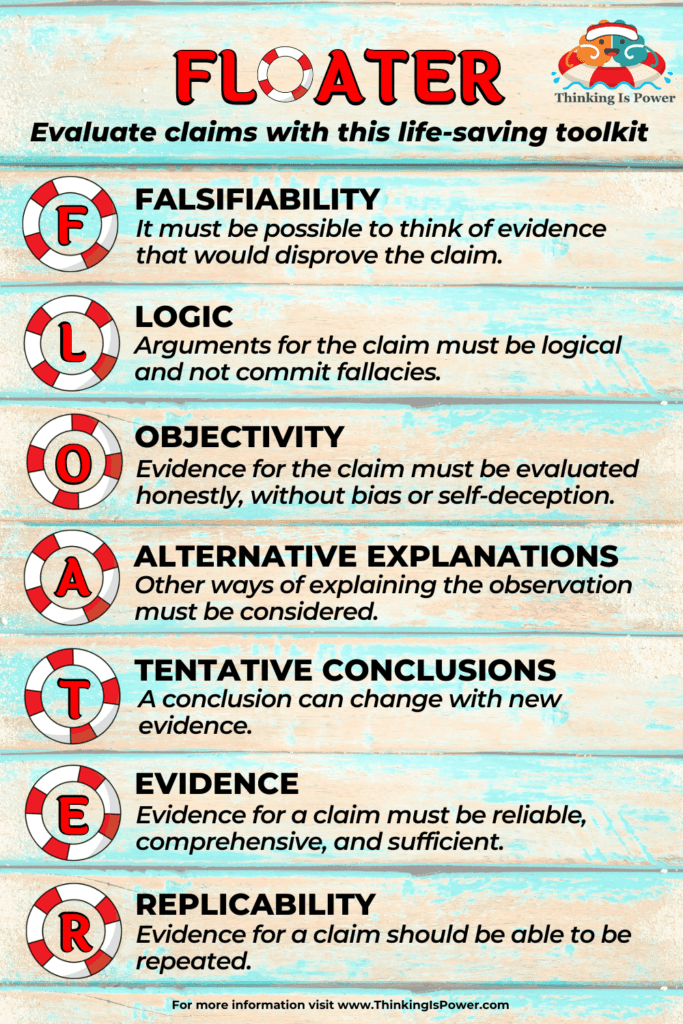

While FiLCHeRS has served my students well, I’ve found myself adding rules and updating examples to help my students navigate today’s misinformation landscape. The result is this guide to evaluating claims, summarized by the (hopefully memorable) acronym FLOATER, which stands for Falsifiability, Logic, Objectivity, Alternative explanations, Tentative, Evidence, and Replicability.

Think of FLOATER as a life-saving device. By using the seven rules in the toolkit we can protect ourselves from drowning in a sea of bad claims.

The foundation of FLOATER is skepticism. While skepticism has taken on a variety of connotations, from cynicism to denialism, scientific skepticism is simply insisting on evidence before accepting a claim, and proportioning the strength of our belief to the strength and quality of the evidence.

Before using this guide, clearly identify the claim and define any potentially ambiguous terms. And remember, the person making the claim bears the burden of proof and must provide enough positive evidence to establish the claim’s truth.

FALSIFIABILITY: It must be possible to think of evidence that would prove the claim false.

It seems counterintuitive, but the first step in determining if a claim is true is to try to determine if you can prove it wrong.

Falsifiable claims can be proven false with evidence. If a claim is false, the evidence will disprove it. If it’s true, the evidence won’t be able to disprove it.

Scientific claims must be falsifiable. Indeed, the process of science involves trying to disprove falsifiable claims. If the claim withstands attempts at disproof we are more justified in tentatively accepting it.

Unfalsifiable claims cannot be proven false with evidence. They could be true, but since there is no way to use evidence to test the claim, any “evidence” that appears to support the claim is useless. Unfalsifiable claims are essentially immune to evidence.

There are four types of claims that are unfalsifiable.

- Subjective claims: Claims based on personal preferences, opinions, values, ethics, morals, feelings, and judgements.

For example, I may believe that cats make the best pets and that healthcare is a basic human right, but neither of these beliefs is falsifiable, no matter how many facts or pieces of evidence I use to justify them.

- Supernatural claims: Claims that invoke entities such as gods and spirits, vague energies and forces, and magical human abilities such as psychic powers.

By definition, the supernatural is above and beyond what is natural and observable and therefore isn’t falsifiable. This doesn’t mean these claims are necessarily false (or true!), but that there is no way to collect evidence to test them.

For example, so-called “energy medicine,” such as reiki and acupuncture, is based on the claim that illnesses are caused by out-of-balance energy fields which can be adjusted to restore health. However, these energy fields cannot be detected and do not correspond to any known forms of energy.

There are, however, cases where supernatural claims can be falsifiable. First, if a psychic claims to be able to impact the natural world in some way, such as moving/bending objects or reading minds, we can test their abilities under controlled conditions. And second, claims of supernatural events that leave physical evidence can be tested. For example, young earth creationists claim that the Grand Canyon was formed during Noah’s flood approximately 4,000 years ago. A global flood would leave behind geological evidence, such as massive erosional features and deposits of sediment. Unsurprisingly, the lack of such evidence disproves this claim. However, even if the evidence pointed to a global flood only a few thousand years ago, we still couldn’t falsify the claim that a god was the cause.

- Vague claims: Claims that are undefined, indefinite, or unclear.

Your horoscope for today says, “Today is a good day to dream. Avoid making any important decisions. The energy of the day might bring new people into your life.”

Because this horoscope uses ambiguous and vague terms, such as “dream,” “important,” and “might,” it doesn’t make any specific, measurable predictions. What’s more, because it’s open to interpretation, you could convince yourself that it matches what happened to you during the day, especially if you spent the day searching for “evidence.”

Due to legal restrictions, many alternative medicine claims are purposefully vague. For example, a supplement bottle says it “strengthens the immune system,” or a chiropractic advertisement claims it “reduces fatigue.” While these sweeping claims are essentially meaningless because of their ambiguity, consumers often misinterpret them and wrongly conclude that the products are efficacious.

- Ad hoc excuses: These entail rationalizing and making excuses to explain away observations that might disprove the claim.

While the three types of claims described thus far are inherently unfalsifiable, sometimes we protect false beliefs by finding ways to make them unfalsifiable. We do this by making excuses, moving the goalposts, discounting sources or denying evidence, or proclaiming that it’s our “opinion.”

For example, a psychic may dismiss an inaccurate reading by proclaiming her energy levels were low. Or, an acupuncturist might excuse an ineffective treatment by claiming the needles weren’t placed properly along the patient’s meridians. Conspiracy theorists are masters at immunizing their beliefs against falsification by claiming that supportive evidence was covered up and that contradictory evidence was planted.

The rule of falsifiability essentially boils down to this: Evidence matters. And never assume a claim is true because it can’t be proven wrong.

LOGIC: Arguments for the claim must be logical.

Arguments consist of a conclusion, or claim, and one or more premises which provide evidence, or support, for the claim. In effect, the conclusion is a belief, and the premises are the reasons why we hold that belief. Many arguments also contain hidden premises, or unstated assumptions that are required for the conclusion to be true, and therefore must be identified when evaluating arguments.

There are two types of arguments, which differ in the level of support they provide for the conclusion.

Deductive arguments provide conclusive support for the conclusion. Deductive arguments are valid if the conclusion must follow from the premises, and they are sound if the argument is valid and the premises are true. In order for the conclusion to be guaranteed true, the argument must be sound, that is, valid with true premises.

For example: “Cats are mammals. Dmitri is a cat. Therefore, Dmitri is a mammal.” The conclusion has to follow from the premises, and the premises are true. Because this argument is sound, we must accept the conclusion.

In everyday language, the word “valid” generally means true. However, in argumentation, valid means the conclusion follows from the premises, regardless of whether the premises are true or not. The following example is valid but unsound: “Cats are trees. Dmitri is a cat. Therefore, Dmitri is a tree.” The argument is valid because the conclusion follows from the premises, but it is unsound because of an untrue premise: Cats aren’t trees. An unsound argument gives us no reason to accept its conclusion.
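The difference between validity and soundness can be sketched in a few lines of Python. This is only an illustrative toy and not part of the original guide: the function names are hypothetical, the two Dmitri arguments are simplified to the form “If d is an A, then d is a B; d is an A; so d is a B,” and whether each premise is actually true is supplied by hand.

```python
from itertools import product

def is_valid(premises, conclusion, n_vars):
    """A form is valid if no truth assignment makes every premise
    true while the conclusion is false."""
    for values in product([True, False], repeat=n_vars):
        if all(p(*values) for p in premises) and not conclusion(*values):
            return False
    return True

def is_sound(valid, premises_actually_true):
    """Sound = valid form AND every premise is true in the real world."""
    return valid and all(premises_actually_true)

# Shared form of both Dmitri arguments (a = "d is an A", b = "d is a B").
premises = [lambda a, b: (not a) or b,  # if d is an A, then d is a B
            lambda a, b: a]             # d is an A
conclusion = lambda a, b: b             # so d is a B

form_valid = is_valid(premises, conclusion, 2)

# Real-world truth of each premise, entered by hand (an assumption of this sketch).
mammal_argument_sound = is_sound(form_valid, [True, True])   # cats are mammals
tree_argument_sound = is_sound(form_valid, [False, True])    # cats are not trees

# Contrast case, not from the article: "If A then B; B; so A" is an invalid form.
affirming_consequent_valid = is_valid(
    [lambda a, b: (not a) or b, lambda a, b: b],
    lambda a, b: a,
    2,
)

print(form_valid, mammal_argument_sound, tree_argument_sound,
      affirming_consequent_valid)
```

Both Dmitri arguments share the same valid form, so the checker cannot tell them apart. Only the separate, real-world check of the premises distinguishes the sound argument from the unsound one.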

Inductive arguments provide probable support for the conclusion. Unlike deductive arguments, in which a conclusion is guaranteed if the argument is sound, inductive arguments only provide varying degrees of support for a conclusion. Inductive arguments whose premises are true and provide reasonable support are considered to be strong, while those that do not provide reasonable support for the conclusion are weak.

For example: “Dmitri is a cat. Dmitri is orange. Therefore, all cats are orange.” Even if the premises are true (and they are), a sample size of one does not provide reasonable support to generalize to all cats, making this argument weak.

Logical fallacies are flaws in reasoning that weaken or invalidate an argument. While there are more logical fallacies than can be covered in this guide, some of the more common fallacies include:

- Ad hominem: Attempts to discredit an argument by attacking the source.

- Appeal to (false) authority: Claims that something is true based on the position of an assumed authority.

- Appeal to emotions: Attempts to persuade with emotions, such as anger, fear, happiness, or pity, in place of reason or facts.

- Appeal to the masses: Asserts that a claim is true because many people believe it.

- Appeal to nature: Argues that something is good or better because it’s natural.

- Appeal to tradition: Argues that something is good or true because it’s been around for a long time.

- False choice: Presents only two options when many more likely exist.

- Hasty generalization: Draws a broad conclusion based on a small sample size.

- Mistaking correlation for causation: Assumes that because events occurred together there must be a causal connection.

- Red herring: Attempts to mislead or distract by referencing irrelevant information.

- Single cause: Oversimplifies a complex issue to a single cause.

- Slippery slope: Suggests an action will set off a chain of events leading to an extreme, undesirable outcome.

- Straw man: Misrepresents someone’s argument to make it easier to dismiss.

Consider the following example: “GMO foods are unhealthy because they aren’t natural.” The conclusion is “GMO foods are unhealthy,” and the stated premise is “They aren’t natural.” This argument has a hidden premise, “Things that aren’t natural are unhealthy,” which commits the appeal to nature fallacy. We can’t assume that something is healthy or unhealthy based on its presumed naturalness. (Arsenic and botulinum are natural, but neither is good for us!) By explicitly stating the hidden premise, and recognizing the flaw in reasoning, we see that we should reject this argument.

OBJECTIVITY: The evidence for a claim must be evaluated honestly.

Richard Feynman famously said, “The first principle is that you must not fool yourself, and you are the easiest person to fool.”

Most of us think we’re objective…it’s those who disagree with us who are biased, right?

Unfortunately, every single one of us is prone to flawed thinking that can lead us to draw incorrect conclusions. While there are numerous ways we deceive ourselves, three of the most common errors are:

- Motivated reasoning: Emotionally-biased search for justifications that support what we want to be true.

- Confirmation bias: Tendency to search for, favor, and remember information that confirms our beliefs.

- Overconfidence effect: Tendency to overestimate our knowledge and/or abilities.

The rule of objectivity is probably the most challenging rule of all, as the human brain’s capacity to reason is matched only by its ability to deceive itself. We don’t set out to fool ourselves, of course. But our beliefs are important to us: they become part of who we are and bind us to others in our social groups. So when we’re faced with evidence that threatens a deeply held belief, especially one that’s central to our identity or worldview, we engage in motivated reasoning and confirmation bias to search for evidence that supports the conclusion we want to believe and discount evidence that doesn’t. If you’re looking for evidence you’re right, you will find it. You’ll be wrong…but confident you’re right.

Ultimately the rule of objectivity requires us to be honest with ourselves – which is why it’s so difficult. The problem is, we’re blind to our own biases.

The poster children for violating the rule of objectivity are pseudoscience and science denial, both of which start from a desired conclusion and work backwards, cherry picking evidence to support the belief while ignoring or discounting evidence that doesn’t. There are, however, key differences:

- Pseudoscience is a collection of beliefs or practices that are portrayed as scientific, but aren’t. Pseudoscientific beliefs are motivated by the desire to believe something is true, especially if it conforms to an individual’s existing beliefs, sense of identity, or even wishful thinking; because of this, the standard of evidence is very low. Examples of pseudoscience include various forms of alternative medicine, cryptozoology, many New Age beliefs, and the paranormal.

- Science denial is the refusal to accept well-established science. Denial is motivated by the desire not to believe a scientific conclusion, often because it conflicts with existing beliefs, personal identity, or vested interests; as such, the standard of evidence is set impossibly high. Examples include denying human-caused climate change, evolution, the safety and efficacy of vaccines, and GMO safety.

In both of these cases, believers are so sure they’re right, and their desire to protect their cherished beliefs is so strong, they are unable to see the errors in their thinking.

To objectively evaluate evidence for a claim, pay attention to your thinking process. Look at all of the evidence, even (especially) evidence that contradicts what you want to believe. No denial or rationalization. No cherry picking or ad hoc excuse-making. If the evidence suggests you should change your mind, then that’s what you must do.

It also helps to separate your identity from the belief, or evidence that the belief is wrong will feel like a personal attack. And don’t play on a team…be the referee. If defending your beliefs is more important to you than understanding reality, you will likely fool yourself.

ALTERNATIVE EXPLANATIONS: Other ways of explaining the observation must be considered.

It’s human nature to get attached to an explanation, often because it came from someone we trust or it fits with our existing beliefs. But if the goal is to know the real explanation, we should keep in mind that we might be wrong and consider alternative explanations.

Start by brainstorming other ways to explain your observation. (The more the better!) Ask yourself: What else could be the cause? Could there be more than one cause? Or could it be a coincidence? In short, propose as many (falsifiable) explanations as your creativity allows. Then try to disprove each of the explanations by comprehensively and objectively evaluating the evidence.

Next, determine which of the remaining explanations is the most likely. One helpful tool is Occam’s razor, which states that the explanation that requires the fewest new assumptions has the highest probability of being the right one. In practice, identify and evaluate the assumptions each explanation needs in order to be correct, and keep in mind that extraordinary claims require extraordinary evidence.

For example, one morning you wake up to find a broken glass on the floor. Naturally, you want to know how it got there! Maybe it was a burglar? Could it have been a ghost? Or maybe it was the cat?

You look for other signs that someone was in your house, such as a broken window or missing items, but without other evidence the burglar explanation seems unlikely. The ghost explanation requires a massive new assumption for which we currently don’t have proof: the existence of spirits. So while it’s possible that a specter was in your house during the night, a ghost breaking the glass seems even less likely than the burglar explanation, as it requires additional, unproven assumptions, for which there is no extraordinary evidence. Finally, you look up to see your cat watching you clean shards of glass off of the floor and remember seeing him push objects off of tables and counters. You don’t have definitive proof it was the cat…but it was probably the cat.
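As a rough sketch of how Occam’s razor could be applied to the broken glass, the toy Python below tallies the new, unproven assumptions behind each explanation and ranks the explanations by that count. The assumption lists are my own illustrative guesses, not something the example specifies, and a simple count is a crude stand-in for actually weighing how plausible each assumption is.

```python
# Hypothetical tally of the NEW, unproven assumptions each explanation needs.
# These lists are illustrative guesses made up for this sketch.
explanations = {
    "burglar": [
        "someone broke in last night",
        "they left no other trace",
    ],
    "ghost": [
        "spirits exist",
        "spirits can move physical objects",
        "a spirit was in the house last night",
    ],
    # The cat is known to live here and to push objects off tables,
    # so this explanation needs no new assumptions.
    "cat": [],
}

def rank_by_fewest_assumptions(candidates):
    """Order explanations from fewest to most new assumptions."""
    return sorted(candidates, key=lambda name: len(candidates[name]))

ranking = rank_by_fewest_assumptions(explanations)
for name in ranking:
    print(f"{name}: {len(explanations[name])} new assumption(s)")
```

The ranking only tells you where to look first. Each explanation still has to survive an honest attempt to disprove it with evidence.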

TENTATIVE CONCLUSIONS: In science, any conclusion can change based on new evidence.

A popular misconception about science is that it results in proof, but scientific conclusions are always tentative. Each study is a piece of a larger picture that becomes clearer as the pieces are put together. However, because there is always more to learn (more pieces of the puzzle yet to be discovered) science doesn’t provide absolute certainty; instead, uncertainty is reduced as evidence accumulates. There’s always the possibility that we’re wrong, so we have to leave ourselves open to changing our minds with new evidence.

Some scientific conclusions are significantly more robust than others. Explanations that are supported by a vast amount of evidence are called theories. Because the evidence for many theories is so overwhelming, and from many different independent lines of research, they are very unlikely to be overturned…although they may be modified to account for new evidence.

Importantly, this doesn’t mean scientific knowledge is untrustworthy. Quite the opposite: science is predicated on the humility of scientists and their willingness and ability to learn. If scientific ideas were set in stone, knowledge couldn’t progress.

Part of critical thinking is learning to be comfortable with ambiguity and uncertainty. Evidence matters, and the more and better our evidence, the more justified we are in accepting a claim. But knowledge is not black or white. It’s a spectrum, with lots of shades of gray. Because we can never be 100% certain, we shouldn’t be overly confident!

Therefore, the goal of evaluating claims and explanations isn’t to prove them true. Disprove those you can, then tentatively accept those left standing proportional to the evidence available, and adjust your confidence accordingly. Be open to changing your mind with new evidence and consider that you might never know for sure.

EVIDENCE: The evidence for a claim must be reliable, comprehensive, and sufficient.

Evidence gives us reasons to believe (or not believe) a claim. In general, the more and better the evidence, the more justified we are in accepting a claim. This requires that we assess the quality of the evidence based on the following considerations:

1. The evidence must be reliable.

Not all evidence is created equal. To determine if the evidence is reliable we must look at two factors:

- How the evidence was collected. A major reason science is so reliable is that it uses a systematic method of collecting and evaluating evidence.

However, scientific studies vary in the quality of evidence they provide. Anecdotes and testimonials are the least reliable and are never considered to be sufficient to establish the truth of a claim. Observational studies collect real-world data and can provide correlational evidence, while controlled studies provide causal evidence. At the top of the hierarchy of evidence are meta-analyses and systematic reviews, as they are a combination of other studies and therefore look at the big picture.

- The source of the information. Sources matter, as unreliable sources do not provide reliable evidence.

In general, the most reliable sources are peer-reviewed journals, because as the name suggests, the information had to be approved by other experts before being published. Reputable science organizations and government institutions are also very reliable. The next most reliable sources are high-quality journalistic outlets that have a track record of accurate reporting. Be skeptical of websites or YouTube channels that are known to publish low quality information, and be very wary of unsourced material on social media.

In addition, experts are more reliable than non-experts, as they have the qualifications, background knowledge and experience necessary to understand their field’s body of evidence. Experts can be wrong, of course, but they’re much less likely to be wrong than non-experts. If the experts have reached consensus, it is the most reliable knowledge.

2. The evidence must be comprehensive.

Imagine the evidence for a claim is like a puzzle, with each puzzle piece representing a piece of evidence. If we stand back and look at the whole puzzle, or body of evidence, we can see how the pieces of evidence fit together and the larger picture they create.

You could, either accidentally or purposefully, cherry pick any one piece of the puzzle and miss the bigger picture. For example, everything that’s alive needs liquid water. The typical person can only live for three or four days without water. In fact, water is so essential to life that, when looking for life outside of Earth, we look for evidence of water. But, what if I told you that all serial killers have admitted to drinking water? Or that it’s the primary ingredient in many toxic pesticides? Or that drinking too much water can lead to death?

By selectively choosing these facts (or pieces of the puzzle), we can wind up with a distorted, inaccurate view of water’s importance for life. So if we want to better understand the true nature of reality, it behooves us to look at all of the evidence…including (especially!) evidence that doesn’t support the claim. And be wary of those who use single studies as evidence – they may want to give their position legitimacy, but in science you don’t get to pick and choose. You have to look at all the relevant evidence. If independent lines of evidence are in agreement, or what scientists call consilience of evidence, the conclusion is considered to be very strong.

3. The evidence must be sufficient.

To establish the truth of a claim, the evidence must be sufficient. Claims made without evidence provide no reason to believe, and can be dismissed. In general:

- Claims based on authority are never sufficient. Expertise matters, of course, but experts should still provide evidence. “Because I said so,” is never enough.

- Anecdotes are never sufficient. Personal stories can be very powerful. But they can also be unreliable. People can misperceive their experiences…and unfortunately, they can also lie.

- Extraordinary claims require extraordinary evidence. Essentially, the more implausible or unusual the claim, the more evidence is required to accept it.

As an example, let’s say you own a company, and Jamie works for you. She is an excellent employee, always on time, and always does great work. One day, Jamie is late for work. If Jamie tells you her car broke down, you most likely will believe her. You have no reason not to, although if you’re really strict you may ask for a receipt from the tow truck driver or mechanic. But what if Jamie tells you she’s late because she was abducted by aliens? I don’t know about you, but my standard of evidence just shot through the roof. That’s an extraordinary claim, and she bears the burden of proof. If she tells you that one of the aliens took her to another dimension and forced her to bear offspring, but then reversed time to bring her back without physical changes…again, just speaking for myself, but I’m either going to assume she’s lying or suggest she see a professional.
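One common way to make “extraordinary claims require extraordinary evidence” concrete is Bayes’ rule, which the guide itself does not use. The sketch below is a hedged illustration only: every number is invented for the example, and the `posterior` function name is hypothetical. It treats Jamie’s word as the only evidence and asks how much that same evidence should move us for an ordinary claim versus an extraordinary one.

```python
def posterior(prior, p_evidence_if_true, p_evidence_if_false):
    """Bayes' rule: probability the claim is true after seeing the evidence."""
    numerator = prior * p_evidence_if_true
    return numerator / (numerator + (1 - prior) * p_evidence_if_false)

# The evidence in both cases is simply "Jamie says so".
# All probabilities below are made-up assumptions for illustration.
P_SAYS_IT_IF_TRUE = 0.9    # she would very likely tell you if it happened
P_SAYS_IT_IF_FALSE = 0.05  # she rarely makes things up

# Ordinary claim: cars break down fairly often (assumed prior of 0.2).
car_posterior = posterior(0.2, P_SAYS_IT_IF_TRUE, P_SAYS_IT_IF_FALSE)

# Extraordinary claim: alien abduction (assumed prior of one in a billion).
alien_posterior = posterior(1e-9, P_SAYS_IT_IF_TRUE, P_SAYS_IT_IF_FALSE)

print(f"car broke down:   {car_posterior:.3f}")
print(f"alien abduction:  {alien_posterior:.2e}")
```

Under these assumed numbers, the same testimony makes the car story quite believable but leaves the abduction story vanishingly unlikely. Much stronger evidence would be needed to overcome such a low starting plausibility.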

REPLICABILITY: Evidence for a claim should be able to be repeated.

Replicability (and its related terms) can refer to a range of definitions, but for the purpose of this guide it means the ability to arrive at a similar conclusion no matter who is doing the research or what methodology they use. The rule of replicability is foundational to the self-correcting nature of science, as it helps to safeguard against coincidence, error, or fraud.

The goal of science is to understand nature, and nature is consistent; therefore, experimental results should be too. But it’s also true that science is a human endeavor, and humans are imperfect, and this can lead to fraud or error. For example, in 1998, Andrew Wakefield published a study involving 12 children claiming to have found a link between the MMR (measles, mumps, rubella) vaccine and autism. After scientists all over the world tried unsuccessfully to replicate Wakefield’s findings – with some studies involving millions of children – it was discovered that Wakefield had forged his data as part of a scheme to profit off of a new vaccine. The inability to replicate Wakefield’s study highlights the importance of not relying on any single study.

Conversely, we can be significantly more confident in results that are successfully replicated independently with multiple studies. And we can be the most confident in conclusions that are supported by multiple independent lines of evidence, especially those from completely different fields of science. For example, because evidence for the theory of evolution comes from many diverse lines, including anatomical similarities, shared developmental pathways, vestigial structures, imperfect adaptations, DNA and protein similarities, biogeography, fossils, etc., scientists have great confidence in accepting that all living things share a common ancestor.

The Take-Home Message

While critical thinking and science literacy can help us make better decisions, these skills are difficult to master. This toolkit provides a structured, systematic method for evaluating claims, which can protect us from being fooled (or even harmed) by misinformation. Using FLOATER’s seven rules will likely take practice, but it’s worth it!

So stay afloat in the sea of misinformation with FLOATER.

References

Lett, James. 1990. A field guide to critical thinking. Skeptical Inquirer 14(2): 153-160.

Acknowledgements

This work would not have been possible without Dr. James Lett. His original “A Field Guide to Critical Thinking” has been an invaluable resource to educators such as myself for decades, and I greatly appreciate his support and suggestions while I was writing this update.

I would also like to extend my deepest gratitude to Dr. Matthew P. Rowe, Dr. Marcus Gillespie, and Dr. John Cook for their support, feedback, and guidance.

And a special thanks to Wendy Cook for designing the logo!

Dear Professor

Do you have a textbook on critical thinking for high-school pupils that could also be useful for adults wishing to know more of the subject?

Regards

Digby

Hi Digby,

Thank you for your question! I don’t have a textbook, but it’s something I’ve been thinking about… Glad to know there might be interest!

Melanie

I’m ready to buy your textbook as soon as it’s published!

Digby

Thank you 🙂

You do know a “floater” is slang for a, ahem, turd floating in a toilet bowl, don’t you?

I think any students you introduce your Floater idea to will be laughing at you behind your back.

I’m well aware of the slang, and that’s a reason why I like the name: my students won’t ever forget it. They can’t laugh behind my back – I’m in on the joke and will be laughing with them.

Thanks for the comment.

Melanie

Thank you for a clear and succinct explanation on a substantial topic. It is needed!!

Thank you for the comment and the kind words!

Thank you so much mam for your guidance in critical thinking.

If I had gotten guidance like you provide in my early schooling days,

I would be at a different level now.

It has changed my way of thinking and helped me.

I am facing an issue and have a doubt.

My question is –

suppose a company or person is making claim about a product or service that it is good,

How to evaluate their claim ?

As it is a product or service, it has only personal reviews and testimonials .

There is no research available particularly about that .

IF it is, it is in broad sense.

example Through coaching you can learn Spanish ,

but not particularly.

As we know, reviews and testimonials are not a reliable source of evidence, as

they can be paid

or fake or from close friends and family

So, in such condition, how can we evaluate the claim if it is true or not?

Please share your views on this mam.

I would be very grateful to you

Thanking you.

SHUBHAM

Hi Shubham,

Thank you for your comment/question. Before I try and answer, could you provide more information? Are there any particular products you have in mind? For example, evaluating a skin cream will be different than evaluating a smart watch and so on.

Thanks

Melanie

Thank you so much Mam for replying and considering my query.

Highly appreciate it Mam.

As I am student Mam.

Mostly I am facing challenge choosing good coach / mentor / guide for any particular field or subject.

Whenever I face an issue/problem.

I lack to get proper guidance on that.

For example,

I wanted to join content writing class.

So I inquired about it.

I got the top results,

They have experience in field with good reviews and

People testimonials saying good about them.

So I chose one of them.

But it turned out totally different.

Whatever they were telling before,

were totally different in the class.

And they were telling to write reviews in last day of class to students only.

Although they had not gotten any results or benefit from the course,

still they were writing good reviews from them.

I see these type of reviews and testimonials of people ,

who didn’t get any results from the product or service they are using,

But still they are reviewing it and saying good about it.

This is just one example,

Due to lack of proper Knowledge and guidance.

I mostly get trapped in these type of marketing stuff.

Results in waste of time and resources

And I am not able to get to the right stuff.

Currently due to this,

It has become very hard for me to take any decision,

As fear of failure grabs me.

Hope I have given proper inputs as you said mam.

Waiting for your response.

Thanking you mam.

SHUBHAM

You ask a very difficult question, and one that I’m not sure I can help you with without more information. And maybe even not then!

Speaking from personal experience, when I am interested in a product/service I, too, look at reviews from other customers. But as you articulated, those can be biased, paid for, fake, etc. It’s also not a random sample. I may also look at reviews from news organizations, magazines, etc. But again, those can have the same issues. Independent watchdogs can be a great place to go for information if it’s available. I may consider looking at reviews from people I trust, such as academics or even friends and family members. I also prefer to choose products/services that have some type of money back guarantee and a reliable business record.

Not sure if that was helpful! Good luck to you. And thanks for the question!

Ok mam.

Thank you mam for your valuable time.

Thanks so much for the insight, I have benefited so much. Do you have a PDF file?

I’m so glad you found it useful! I’m working on a one page summary for my students. Would that help?

Melanie

Dear Professor

Two years ago a friend sent me a PDF of an article from the website sciencedirect.com. The title is “Confronting indifference toward truth: Dealing with workplace bullshit”. (I don’t know if there’s a polite term for bullshit, but most people in Australasia are not shy to use it.) Where bullshit fits in the misinformation/disinformation spectrum I don’t know, but I would think it is surely useful to know when one is being subjected to it — regardless of whether it occurs in the workplace or not.

Anyway, the article’s authors present a four-step framework for dealing with workplace bullshit. They use the appropriate acronym CRAP. Here is my brief summary:

C (comprehend) understanding what is and is not bullshit

R (recognize) detecting bullshit

A (act) dealing with bullshit

P (prevent) ways to stop bullshit

What is of interest here is that under P one of the key methods described is “encourage critical thinking”. This immediately puts me in mind of FLOATER. Moreover, the L in FLOATER leads me to John Cook’s FLICC. So we have the acronyms CRAP leading to FLOATER leading to FLICC. Is this a legitimate structure worthy of consideration or am I talking rubbish?

Kind regards

Digby

Hi Digby,

Thanks for your comment! In “On Bullshit,” Harry Frankfurt defined bullshit as an attempt at persuading without any concern for the truth. (It’s a great read, and I highly recommend it.) Understanding how to recognize and not fall for BS is therefore an essential part of critical thinking and information literacy.

I personally don’t teach my students the CRAP test (now the CRAAP test) because it focuses on vertical reading; lateral reading is much faster and more reliable. I do use John Cook’s FLICC acronym, as I think it does a great job of summarizing the logical fallacies common in science denial.

Apologies for the rambling but I’m thinking out loud while attempting to answer your question. I think I might have to think some more!

Best

Melanie

I read the piece by Harry Frankfurt — very entertaining but made my head hurt! I think I prefer physics!

Digby

But you’re just at the start of the bullshit literature journey!

This is probably my favorite study ever. It even won an Ig Nobel.

Melanie

Holy cow and Deepak Chopra! I definitely prefer physics!

I’ve had further thoughts about this. As a retired technical writer (with an ancient physics degree) I can assert that it is the job of the technical writer to eliminate bullshit. In my case I had to translate engineer-speak into technician-speak, and if the technician couldn’t understand something he made damn sure I fixed it. Any whiff of bullshit had to be rooted out. As a result of this experience I find myself unable to make bullshit statements. In addition, bullshit statements by others annoy the hell out of me. Perhaps technical writing should be taught at school!

Digby

My graduate degree is in ecology, but my major professor would tell me to “write like I had to pay for every word.” As a result, I get really annoyed with even basic flowery language, let alone bullshit. And yes, technical writing should be taught at school!

Thanks for making me laugh!

The link provided at the top of this article is broken: it refers to the other article you wrote for S.I. I think this is where it should go: https://skepticalinquirer.org/2022/02/a-life-preserver-for-staying-afloat-in-a-sea-of-misinformation/

Thanks for letting me know. It’s fixed!

Melanie

Great articles! I teach critical thinking and have picked up a few great ideas here! I’m looking forward to the presentation.

Thanks for the comment and kind words! I’m a huge fan of critical thinking education 🙂

Melanie

Hi Melanie! Just saw you on Seth’s show (The Thinking Atheist)… I saw the FLOATER poster, and thought it would be great to print that out and post it on a wall — partly for myself, and partly for others who might come by. I don’t have a classroom or anything, so it seems hard to justify buying a poster (though if I ever wanted a full-sized one, I’d absolutely get it from you rather than taking it to a print shop myself, personally), so… that’s why I, too, would love a PDF. I also figure it might be good for others, though, for whom the cost of the poster might be prohibitive, yet might have an ability to make even a somewhat larger printout… folks in other places of the world, especially. I don’t know what Alice’s needs were, but for me, that’s why I’d want a PDF. I hope that’s helpful information!

Hi David,

Thanks so much for reaching out and the kind comments! You raise an interesting idea, and one I hadn’t considered…although I’m also not entirely sure what you mean. Can you explain more so that I can try to help?

Thanks again!

Melanie
